2024

Gao, G., Liu, W., Chen, A., Geiger, A., Schölkopf, B.

GraphDreamer: Compositional 3D Scene Synthesis from Scene Graphs

The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024 (conference) Accepted

Guo, S., Wildberger, J., Schölkopf, B.

Out-of-Variable Generalization for Discriminative Models

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Pace, A., Yèche, H., Schölkopf, B., Rätsch, G., Tennenholtz, G.

Delphic Offline Reinforcement Learning under Nonidentifiable Hidden Confounding

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Meterez*, A., Joudaki*, A., Orabona, F., Immer, A., Rätsch, G., Daneshmand, H.

Towards Training Without Depth Limits: Batch Normalization Without Gradient Explosion

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024, *equal contribution (conference) Accepted

Spieler, A., Rahaman, N., Martius, G., Schölkopf, B., Levina, A.

The Expressive Leaky Memory Neuron: an Efficient and Expressive Phenomenological Neuron Model Can Solve Long-Horizon Tasks

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Open X-Embodiment Collaboration ( incl. Guist, S., Schneider, J., Schölkopf, B., Büchler, D. ).

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

IEEE International Conference on Robotics and Automation (ICRA), May 2024 (conference) Accepted

Jin, Z., Liu, J., Lyu, Z., Poff, S., Sachan, M., Mihalcea, R., Diab*, M., Schölkopf*, B.

Can Large Language Models Infer Causation from Correlation?

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024, *equal supervision (conference) Accepted

Donhauser, K., Lokna, J., Sanyal, A., Boedihardjo, M., Hönig, R., Yang, F.

Certified private data release for sparse Lipschitz functions

27th International Conference on Artificial Intelligence and Statistics (AISTATS), May 2024 (conference) Accepted

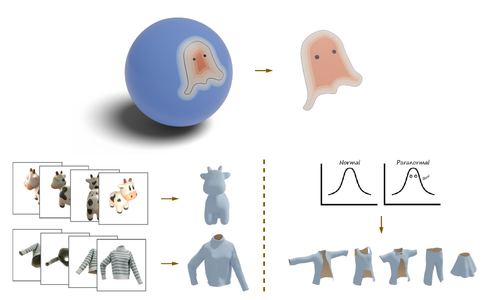

Liu, Z., Feng, Y., Xiu, Y., Liu, W., Paull, L., Black, M. J., Schölkopf, B.

Ghost on the Shell: An Expressive Representation of General 3D Shapes

In Proceedings of the Twelfth International Conference on Learning Representations, The Twelfth International Conference on Learning Representations, May 2024 (inproceedings) Accepted

Schneider, J., Schumacher, P., Guist, S., Chen, L., Häufle, D., Schölkopf, B., Büchler, D.

Identifying Policy Gradient Subspaces

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Khromov*, G., Singh*, S. P.

Some Intriguing Aspects about Lipschitz Continuity of Neural Networks

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024, *equal contribution (conference) Accepted

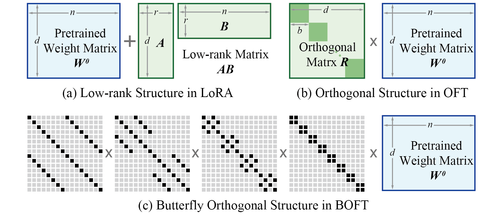

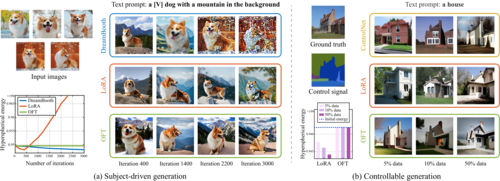

Liu, W., Qiu, Z., Feng, Y., Xiu, Y., Xue, Y., Yu, L., Feng, H., Liu, Z., Heo, J., Peng, S., Wen, Y., Black, M. J., Weller, A., Schölkopf, B.

Parameter-Efficient Orthogonal Finetuning via Butterfly Factorization

In Proceedings of the Twelfth International Conference on Learning Representations, The Twelfth International Conference on Learning Representations, May 2024 (inproceedings) Accepted

Pan, H., Schölkopf, B.

Skill or Luck? Return Decomposition via Advantage Functions

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Imfeld*, M., Graldi*, J., Giordano*, M., Hofmann, T., Anagnostidis, S., Singh, S. P.

Transformer Fusion with Optimal Transport

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024, *equal contribution (conference) Accepted

Lorch, L., Krause*, A., Schölkopf*, B.

Causal Modeling with Stationary Diffusions

27th International Conference on Artificial Intelligence and Statistics (AISTATS), May 2024, *equal supervision (conference) Accepted

Yao, D., Xu, D., Lachapelle, S., Magliacane, S., Taslakian, P., Martius, G., von Kügelgen, J., Locatello, F.

Multi-View Causal Representation Learning with Partial Observability

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Theus, A., Geimer, O., Wicke, F., Hofmann, T., Anagnostidis, S., Singh, S. P.

Towards Meta-Pruning via Optimal Transport

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024 (conference) Accepted

Lin*, J. A., Padhy*, S., Antorán*, J., Tripp, A., Terenin, A., Szepesvari, C., Hernández-Lobato, J. M., Janz, D.

Stochastic Gradient Descent for Gaussian Processes Done Right

Proceedings of the Twelfth International Conference on Learning Representations (ICLR), May 2024, *equal contribution (conference) Accepted

Hu, Y., Pinto, F., Yang, F., Sanyal, A.

PILLAR: How to make semi-private learning more effective

2nd IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), April 2024 (conference) Accepted

Kottapalli, S. N. M., Schlieder, L., Song, A., Volchkov, V., Schölkopf, B., Fischer, P.

Polarization-based non-linear deep diffractive neural networks

AI and Optical Data Sciences V, PC12903, pages: PC129030B, (Editors: Ken-ichi Kitayama and Volker J. Sorger), SPIE, January 2024 (conference)

Song, A., Kottapalli, S. N. M., Schölkopf, B., Fischer, P.

Multi-channel free space optical convolutions with incoherent light

AI and Optical Data Sciences V, PC12903, pages: PC129030I, (Editors: Ken-ichi Kitayama and Volker J. Sorger), SPIE, January 2024 (conference)

Tsirtsis, S., Tabibian, B., Khajehnejad, M., Singla, A., Schölkopf, B., Gomez-Rodriguez, M.

Optimal Decision Making Under Strategic Behavior

Management Science, 2024, Published Online (article) In press

2023

Chaudhuri, A., Mancini, M., Akata, Z., Dutta, A.

Transitivity Recovering Decompositions: Interpretable and Robust Fine-Grained Relationships

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference) Accepted

Eastwood*, C., Singh*, S., Nicolicioiu, A. L., Vlastelica, M., von Kügelgen, J., Schölkopf, B.

Spuriosity Didn’t Kill the Classifier: Using Invariant Predictions to Harness Spurious Features

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 18291-18324, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Jin*, Z., Chen*, Y., Leeb*, F., Gresele*, L., Kamal, O., Lyu, Z., Blin, K., Gonzalez, F., Kleiman-Weiner, M., Sachan, M., Schölkopf, B.

CLadder: A Benchmark to Assess Causal Reasoning Capabilities of Language Models

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 31038-31065, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *main contributors (conference)

Gao*, R., Deistler*, M., Macke, J. H.

Generalized Bayesian Inference for Scientific Simulators via Amortized Cost Estimation

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 80191-80219, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Coda-Forno, J., Binz, M., Akata, Z., Botvinick, M., Wang, J. X., Schulz, E.

Meta-in-context learning in large language models

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference) Accepted

Kuznetsova*, R., Pace*, A., Burger*, M., Yèche, H., Rätsch, G.

On the Importance of Step-wise Embeddings for Heterogeneous Clinical Time-Series

Proceedings of the 3rd Machine Learning for Health Symposium (ML4H) , 225, pages: 268-291, Proceedings of Machine Learning Research, (Editors: Hegselmann, S.and Parziale, A. and Shanmugam, D. and Tang, S. and Asiedu, M. N. and Chang, S. and Hartvigsen, T. and Singh, H.), PMLR, December 2023, *equal contribution (conference)

Qiu*, Z., Liu*, W., Feng, H., Xue, Y., Feng, Y., Liu, Z., Zhang, D., Weller, A., Schölkopf, B.

Controlling Text-to-Image Diffusion by Orthogonal Finetuning

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 79320-79362, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Guo*, S., Tóth*, V., Schölkopf, B., Huszár, F.

Causal de Finetti: On the Identification of Invariant Causal Structure in Exchangeable Data

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 36463-36475, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Lin*, J. A., Antorán*, J., Padhy*, S., Janz, D., Hernández-Lobato, J. M., Terenin, A.

Sampling from Gaussian Process Posteriors using Stochastic Gradient Descent

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 36886-36912, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Salewski, L., Alaniz, S., Rio-Torto, I., Schulz, E., Akata, Z.

In-Context Impersonation Reveals Large Language Models’ Strengths and Biases

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference) Accepted

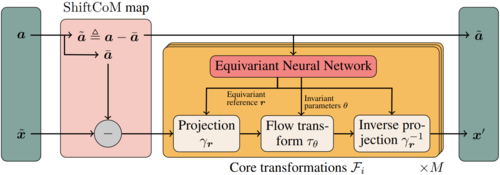

Midgley*, L. I., Stimper*, V., Antorán*, J., Mathieu*, E., Schölkopf, B., Hernández-Lobato, J. M.

SE(3) Equivariant Augmented Coupling Flows

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 79200-79225, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Wildberger*, J., Dax*, M., Buchholz*, S., Green, S. R., Macke, J. H., Schölkopf, B.

Flow Matching for Scalable Simulation-Based Inference

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 16837-16864, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Buchholz*, S., Rajendran*, G., Rosenfeld, E., Aragam, B., Schölkopf, B., Ravikumar, P.

Learning Linear Causal Representations from Interventions under General Nonlinear Mixing

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 45419-45462, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Munkhoeva, M., Oseledets, I.

Neural Harmonics: Bridging Spectral Embedding and Matrix Completion in Self-Supervised Learning

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 60712-60723, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference)

Lorch, L., Krause*, A., Schölkopf*, B.

Causal Modeling with Stationary Diffusions

Causal Representation Learning Workshop at NeurIPS 2023, December 2023, *equal supervision (conference)

Liang, W., Kekić, A., von Kügelgen, J., Buchholz, S., Besserve, M., Gresele*, L., Schölkopf*, B.

Causal Component Analysis

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 32481-32520, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *shared last author (conference)

Park, J., Buchholz, S., Schölkopf, B., Muandet, K.

A Measure-Theoretic Axiomatisation of Causality

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 28510-28540, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference)

von Kügelgen, J., Besserve, M., Liang, W., Gresele, L., Kekić, A., Bareinboim, E., Blei, D., Schölkopf, B.

Nonparametric Identifiability of Causal Representations from Unknown Interventions

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 48603-48638, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023 (conference)

Confavreux*, B., Ramesh*, P., Goncalves, P. J., Macke, J. H., Vogels, T. P.

Meta-learning families of plasticity rules in recurrent spiking networks using simulation-based inference

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 13545-13558, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Tifrea*, A., Yüce*, G., Sanyal, A., Yang, F.

Can semi-supervised learning use all the data effectively? A lower bound perspective

Advances in Neural Information Processing Systems 36 (NeurIPS 2023), 36, pages: 21960-21982, (Editors: A. Oh and T. Neumann and A. Globerson and K. Saenko and M. Hardt and S. Levine), Curran Associates, Inc., 37th Annual Conference on Neural Information Processing Systems, December 2023, *equal contribution (conference)

Hupkes, D., Giulianelli, M., Dankers, V., Artetxe, M., Elazar, Y., Pimentel, T., Christodoulopoulos, C., Lasri, K., Saphra, N., Sinclair, A., Ulmer, D., Schottmann, F., Batsuren, K., Sun, K., Sinha, K., Khalatbari, L., Ryskina, M., Frieske, R., Cotterell, R., Jin, Z.

A taxonomy and review of generalization research in NLP

Nature Machine Intelligence, 5(10):1161-1174, October 2023 (article)

Donhauser, K., Lokna, J., Sanyal, A., Boedihardjo, M., Hönig, R., Yang, F.

Certified private data release for sparse Lipschitz functions

TPDP 2023 - Theory and Practice of Differential Privacy, September 2023 (conference)

Pinto, F., Hu, Y., Yang, F., Sanyal, A.

How to make semi-private learning more effective

TPDP 2023 - Theory and Practice of Differential Privacy, September 2023 (conference)

Hawkins-Hooker, A., Visonà, G., Narendra, T., Rojas-Carulla, M., Schölkopf, B., Schweikert, G.

Getting personal with epigenetics: towards individual-specific epigenomic imputation with machine learning

Nature Communications, 14(1), August 2023 (article)

Kladny, K., von Kügelgen, J., Schölkopf, B., Muehlebach, M.

Causal Effect Estimation from Observational and Interventional Data Through Matrix Weighted Linear Estimators

Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence (UAI), 216, pages: 1087-1097, Proceedings of Machine Learning Research, (Editors: Evans, Robin J. and Shpitser, Ilya), PMLR, August 2023 (conference)

Rangnekar, V., Upadhyay, U., Akata, Z., Banerjee, B.

USIM-DAL: Uncertainty-aware Statistical Image Modeling-based Dense Active Learning for Super-resolution

Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence (UAI), 216, pages: 1707-1717, (Editors: Evans, Robin J. and Shpitser, Ilya), PMLR, August 2023 (conference)

Glöckler, M., Deistler, M., Macke, J. H.

Adversarial robustness of amortized Bayesian inference

Proceedings of 40th International Conference on Machine Learning (ICML) , 202, pages: 11493-11524, Proceedings of Machine Learning Research, (Editors: A. Krause, E. Brunskill, K. Cho, B. Engelhardt, S. Sabato and J. Scarlett), PMLR, July 2023 (conference)

Immer, A., Schultheiss, C., Vogt, J. E., Schölkopf, B., Bühlmann, P., Marx, A.

On the Identifiability and Estimation of Causal Location-Scale Noise Models

Proceedings of the 40th International Conference on Machine Learning (ICML), 202, pages: 14316-14332, Proceedings of Machine Learning Research, (Editors: A. Krause, E. Brunskill, K. Cho, B. Engelhardt, S. Sabato and J. Scarlett), PMLR, July 2023 (conference)